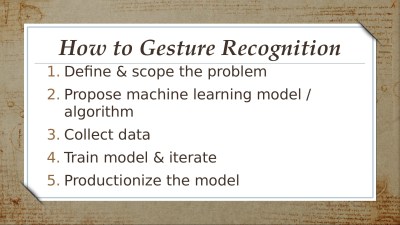

Jennifer Wang likes to dress up for cosplay and she’s a Harry Potter fan. Her wizarding skills are technological rather than magical, but to the casual observer she’s managed to blur those lines. With a lot of experience in different sensors, she decided to fuse all of this together to make a magic wand. The wand contains an inertial measurement unit (IMU) so it can detect gestures. Instead of hardcoding everything, [Jennifer] used machine learning and presented her results at the Hackaday Superconference. Didn’t make it to Supercon? No worries, you can watch her talk on building IMU-based gesture recognition below, and grab the code from GitHub.

Naturally, we enjoyed seeing the technology parts of her project, and this is a great primer on applying machine learning to sensor data. But what we thought were really insightful were the discussions about the entire design lifecycle. Questions that scope the design space, such as how much money you can spend, who will use the device, and where it will be used, are often things we answer subconsciously but never make explicit. Failing to answer these questions at all increases the risk your project will fail or, at least, not be as successful as it could have been.

Balance

Another theme to the talk: design trades. You have to balance what you want vs other project realities like speed, power consumption, and cost. In this case, [Jennifer] points out that a machine learning system can be accurate, memory efficient, or low-latency and she contends you can only pick two. That is, a small and fast model might not be accurate. An accurate model that is fast will probably use a lot of memory, and so on.


Between the requirements and the trades, she takes you through how she arrived at the architecture for the wand which could then actually be designed and built. For example, a camera can recognize gestures, but not in the dark. The final design incorporates a BNO055 IMU, a Raspberry Pi Zero W, an audio system, and, of course, power.

The next trade involved using deep learning versus more traditional approaches. To make that decision, she looked at what each technique would make easier vs what it would make harder. For example, deep learning doesn’t require as much domain knowledge but is harder to debug and requires a lot of data to train well. A signal processing approach is easier to debug but requires more domain knowledge.
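To make the signal processing side of that trade concrete, here is a minimal sketch of what a hand-crafted approach could look like. It is not taken from [Jennifer]'s code: the feature choices, the nearest-centroid classifier, and the function names `extract_features`, `fit_centroids`, and `classify` are all assumptions made for illustration. It shows why this route is easy to debug (every feature is a number you can print and reason about) and why it needs domain knowledge (someone has to decide which features matter).

```python
import numpy as np

def extract_features(window):
    """Turn one gesture window of shape (n_samples, 3) into a 9-element feature vector.

    The features are illustrative guesses, not a tuned set.
    """
    window = np.asarray(window, dtype=float)
    mean = window.mean(axis=0)   # average acceleration per axis
    std = window.std(axis=0)     # how much each axis varies
    # Mean absolute sample-to-sample change: a crude measure of how jerky the motion is
    jerk = np.abs(np.diff(window, axis=0)).mean(axis=0)
    return np.concatenate([mean, std, jerk])

def fit_centroids(windows, labels):
    """Average the feature vectors of each labeled gesture into one centroid per label."""
    feats = np.array([extract_features(w) for w in windows])
    labels = np.array(labels)
    return {str(lab): feats[labels == lab].mean(axis=0) for lab in np.unique(labels)}

def classify(window, centroids):
    """Return the label whose centroid is closest to this window's features."""
    f = extract_features(window)
    return min(centroids, key=lambda lab: np.linalg.norm(f - centroids[lab]))
```

When a classifier like this gets a gesture wrong, you can inspect the nine numbers and see which one misled it. A deep model gives up that transparency in exchange for not having to pick the features yourself.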

The final approach was to collect lots of data and then train a deep learning model on it. An important element here is coverage: making sure the data covers all the expected use cases. The first iteration of the model did not work well, but after the third attempt, things started to fall into place.
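One simple way to think about coverage is to count how many recordings you have for every combination of gesture and recording condition, and flag the thin spots before training. The sketch below assumes each sample carries hypothetical `gesture` and `condition` metadata fields and uses an arbitrary `min_count` threshold; none of these names come from the talk.

```python
from collections import Counter
from itertools import product

def coverage_gaps(samples, gestures, conditions, min_count=5):
    """Return {(gesture, condition): count} for every combination with too few samples.

    `samples` is a list of dicts with hypothetical "gesture" and "condition" keys.
    """
    counts = Counter((s["gesture"], s["condition"]) for s in samples)
    return {
        cell: counts[cell]
        for cell in product(gestures, conditions)
        if counts[cell] < min_count
    }

# Example: plenty of seated recordings, but almost nothing recorded while walking
samples = (
    [{"gesture": "flick", "condition": "seated"}] * 6
    + [{"gesture": "circle", "condition": "seated"}] * 6
    + [{"gesture": "flick", "condition": "walking"}] * 2
)
gaps = coverage_gaps(samples, ["flick", "circle"], ["seated", "walking"])
print(gaps)
```

Combinations that never appear in the data show up with a count of zero, which is exactly the kind of hole that is easy to miss when you only look at the total number of samples.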

Reduced to Practice

The practical side of the software used Python with some numerical libraries and Keras to handle the deep learning aspects. Plot.ly and Jupyter Notebook also came in handy.
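Whatever library does the training, a continuous IMU stream usually has to be cut into fixed-length chunks before a model can consume it. The following is a minimal sketch of that step using NumPy, assuming a stream with one row per sample and one column per sensor channel. The function name `sliding_windows` and the window and step sizes are illustrative assumptions, not values from the project.

```python
import numpy as np

def sliding_windows(stream, window_len, step):
    """Cut a (n_samples, n_channels) stream into overlapping windows.

    Returns an array of shape (n_windows, window_len, n_channels).
    Trailing samples that do not fill a whole window are dropped.
    """
    stream = np.asarray(stream, dtype=float)
    if len(stream) < window_len:
        # Not enough data for even one window: return an empty batch
        return np.empty((0, window_len, stream.shape[1]))
    starts = range(0, len(stream) - window_len + 1, step)
    return np.stack([stream[s:s + window_len] for s in starts])

# Example: 10 samples of 6-channel data (3 accelerometer axes + 3 gyro axes)
stream = np.arange(60).reshape(10, 6)
windows = sliding_windows(stream, window_len=4, step=2)
print(windows.shape)  # (4, 4, 6)
```

A smaller step gives more (heavily overlapping) training windows from the same recording, at the cost of more memory and more redundant examples.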


While a magic wand might not seem very practical, the end of the presentation talks about other things the technology might accomplish like earthquake detection or collecting census data. It could even detect when someone has fallen.

The real value, though, is the design process. As she points out, those same steps — defining requirements, and working through design trades — are important for every project. Sure, sometimes a project is so small that you intuitively do them without thinking. But as projects get bigger, having a process to make sure you’ve covered your needs and made choices consistent with your goals and constraints can be a big help in making sure a project is successful.
