Technology frequently looks at nature to make improvements in efficiency, and we may be nearing a new breakthrough in copying how nature stores data. Maybe some day your thumb drive will be your actual thumb. The entire works of Shakespeare could be stored in an infinite number of monkeys. DNA could become a data storage mechanism! With all the sensationalism surrounding this frontier, it seems like a dose of reality is in order.

The Potential for Greatness

The human genome, with 3 billion base pairs, can store up to 750MB of data at two bits per base pair. In reality nearly every human cell carries two copies of each chromosome, so nearly every cell has 1.5GB of data shoved inside. You could pack 165 billion cells into the volume of a microSD card, which equates to roughly 250 exabytes, and that’s if you keep all the overhead of the rest of the cell and not just the DNA. That’s without any kind of optimizing for data storage, too.
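The arithmetic in this paragraph can be checked in a few lines. This is a minimal sketch, assuming decimal units (1GB = 10^9 bytes) and a raw two bits per base pair; the constant and function names are made up for illustration.

```python
# Back-of-the-envelope check of the storage figures quoted in the text.
BASE_PAIRS = 3_000_000_000            # base pairs in one copy of the human genome
BITS_PER_BASE_PAIR = 2                # four possible bases -> log2(4) = 2 bits
CELLS_PER_MICROSD = 165_000_000_000   # cell count quoted in the text


def genome_bytes(base_pairs=BASE_PAIRS, bits_per_base_pair=BITS_PER_BASE_PAIR):
    """Raw capacity of one copy of the genome, in bytes."""
    return base_pairs * bits_per_base_pair // 8


one_copy = genome_bytes()             # a single copy of the genome
per_cell = 2 * one_copy               # two copies of each chromosome per cell
total = CELLS_PER_MICROSD * per_cell  # every cell in a microSD-sized volume

print(f"one genome copy: {one_copy / 1e6:.0f} MB")   # 750 MB
print(f"per cell:        {per_cell / 1e9:.1f} GB")   # 1.5 GB
print(f"microSD volume:  {total / 1e18:.1f} EB")     # 247.5 EB
```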

This kind of data density is far beyond our current digital storage capabilities. Storing that much data in something as small as a cell could change everything. Beyond the density, there’s also the promise of longevity and replication: a permanent record that can’t get lost and is easily transferred (like medical records). There’s even an element of subterfuge or data transportation, as well as the ability to design self-replicating machines whose purpose is to disseminate information broadly.

So, where is the state of the art in DNA data storage? There’s plenty of promise, but does it actually work?

The Nature of DNA

We’ve been taught that DNA is the blueprint of life, and that information about how cells are made and interact is encoded in the nucleotides adenine, thymine, guanine, and cytosine, each held together with its complement in a long chain. When information needs to be gleaned from this database, enzymes pull apart the chain along its length, transcribe one half into RNA, then transfer the RNA to a ribosome where it is translated into the appropriate protein. Think of how powerful that is. Essentially every cell contains the mechanisms needed for reading and writing data.
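The pairing rule behind that copying step is simple enough to sketch in code. This is a toy illustration only: it ignores strand direction and all of the enzyme machinery, and the function names are made up for this example.

```python
# Each base binds only to its complement: A with T, G with C.
DNA_COMPLEMENT = {"A": "T", "T": "A", "G": "C", "C": "G"}
# RNA uses uracil (U) where DNA would use thymine (T).
RNA_COMPLEMENT = {"A": "U", "T": "A", "G": "C", "C": "G"}


def complement_strand(strand: str) -> str:
    """Return the DNA strand that pairs with the given strand."""
    return "".join(DNA_COMPLEMENT[base] for base in strand)


def transcribe(template: str) -> str:
    """Return the RNA copy made from a DNA template strand."""
    return "".join(RNA_COMPLEMENT[base] for base in template)


template = "TACGGATTC"
print(complement_strand(template))  # ATGCCTAAG
print(transcribe(template))         # AUGCCUAAG
```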

There’s even a mechanism for data integrity. We have multiple chromosomes because if the strands get too long they break in the wrong places, so splitting them up makes sure this doesn’t happen. When the cell divides, the whole chromosomes split in half, and then nucleotides that pair with the half-chain combine with the strand to make two complete copies.

Using DNA Like a Machine

The way we do it with machines is different. First, the idea that each nucleotide can hold two bits of information doesn’t work. It turns out that some sequences don’t work well and are prone to errors or breaking. In addition, some overhead is required to mark starts and stops and indices. Second, the methods for reading and writing require LOTS of copies. The process involves many amplification steps to generate enough copies of the data that will be pulled apart and analyzed in bulk. The gene science community has made leaps and bounds in the last two decades since the start of the Human Genome Project and the discovery of techniques to rapidly sequence DNA, but it still has a long way to go.
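To see why the naive two-bits-per-nucleotide idea runs into trouble, here is a minimal sketch of that mapping. The assignment of bit pairs to bases (00 to A, 01 to C, 10 to G, 11 to T) is an arbitrary assumption for illustration, not a standard.

```python
BASES = "ACGT"  # assumed mapping: 00 -> A, 01 -> C, 10 -> G, 11 -> T


def bytes_to_dna(data: bytes) -> str:
    """Encode each byte as four bases, most significant bit pair first."""
    out = []
    for byte in data:
        for shift in (6, 4, 2, 0):
            out.append(BASES[(byte >> shift) & 0b11])
    return "".join(out)


def dna_to_bytes(seq: str) -> bytes:
    """Invert bytes_to_dna; the sequence length must be a multiple of four."""
    if len(seq) % 4:
        raise ValueError("sequence length must be a multiple of 4")
    out = bytearray()
    for i in range(0, len(seq), 4):
        byte = 0
        for base in seq[i:i + 4]:
            byte = (byte << 2) | BASES.index(base)
        out.append(byte)
    return bytes(out)


print(bytes_to_dna(b"Hi"))        # CAGACGGC
print(bytes_to_dna(b"\x00\x00"))  # AAAAAAAA
```

The second line of output is the problem in miniature: ordinary data such as a run of zero bytes turns into a long string of the same base, which is exactly the kind of sequence that is error prone in practice.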

The short description of the state of the art is that writing DNA is currently still pretty slow and wet and complicated. Modern biochemistry works with oligonucleotides, or oligos, which are short snippets of DNA or RNA. These oligos are up to a couple hundred bases in length, and usually represent a gene or a set of genes. They are designed and ordered from a few companies (Twist Bioscience and IDT are the big names right now) that essentially provide a web form where you upload a text string; they then grow the oligo base by base on an etched glass or silicon array. A chemical reaction gets the first nucleotide to bind to the substrate, and then there’s a process of heating, exposing to the next base, and cooling to get the next base to bind to the next rung in the ladder. This is repeated until the oligo is complete, which can take some time. This article from Twist is probably the most accessible explanation of the process.

The big advances in the past decade have been in automating this process for ever smaller amounts of liquid, smaller wells, and faster cycles. In 2019 a company called Catalog was able to achieve write speeds of 4 megabits per second using essentially an inkjet printer to deposit bases.
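For a sense of scale, the quoted write speed can be turned into wall-clock time. This is a rough sketch that assumes a megabit is 10^6 bits and that the rate is sustained with no encoding overhead, neither of which is stated in the source.

```python
def write_seconds(num_bytes: int, bits_per_second: float = 4_000_000) -> float:
    """Time to write num_bytes at a sustained rate, ignoring all overhead."""
    return num_bytes * 8 / bits_per_second


genome_sized = 750_000_000        # 750MB, one genome's worth of raw data
terabyte = 1_000_000_000_000      # 1TB

print(f"750MB: {write_seconds(genome_sized) / 60:.0f} minutes")  # 25 minutes
print(f"1TB:   {write_seconds(terabyte) / 86_400:.1f} days")     # 23.1 days
```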

Once the oligo generation is done, the scientists have a whole bunch of the same oligo. They typically then perform PCR on it, which is essentially a DNA photocopier machine that rapidly replicates DNA by using an enzyme to convince it to split in half in a juice of bases so that the halves become full strands again, then repeats over and over, a topic we’ve written more deeply on in the past. The other process they do is CRISPR-Cas9, which allows them to take full genomes and cut them in specific locations and splice in the oligos or do other editing.
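The photocopier analogy is exponential: in the ideal case every PCR cycle doubles the number of strands. A minimal sketch of that growth follows; the `efficiency` parameter is an assumption added here to model cycles that fall short of perfect doubling.

```python
import math


def copies_after(cycles: int, start: float = 1, efficiency: float = 1.0) -> float:
    """Copies present after a number of cycles; efficiency=1.0 means perfect doubling."""
    return start * (1 + efficiency) ** cycles


def cycles_needed(target: float, start: float = 1, efficiency: float = 1.0) -> int:
    """Smallest number of cycles that reaches at least `target` copies."""
    return math.ceil(math.log(target / start) / math.log(1 + efficiency))


print(copies_after(30))                       # 1073741824.0
print(cycles_needed(1e9))                     # 30
print(cycles_needed(1e9, efficiency=0.9))     # 33
```

Thirty ideal cycles turn a single strand into roughly a billion copies, which is why amplification is cheap compared with synthesis.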

The good news is that reading this DNA is significantly faster. We used to use the Sanger method of sequencing, which uses fluorescent dyes attached to bases to determine the next base, but the newest hotness is Illumina dye sequencing, which also uses fluorescent dyes, but in parallel instead of serial. Understanding how either of them works broke me, but the gist is that Illumina is way faster and cheaper (relatively speaking) than Sanger. Both are very wet, though, and require lots of chemicals and copies of the strand to be sequenced.

IO Operations and File Structure

It’s shortsighted to think that we’ll always need wet labs and PCR to read and write DNA. The room-sized machines that stored data on magnetic tapes were just as amazing 50 years ago as the room-sized machines that are reading and writing data on DNA now. The problem is that the number of steps and chemical reactions required with DNA operations is significantly higher than magnetic tape. A completely new method of reading and writing will need to be discovered before it can be practical and miniaturized, and when it is and we have the ability to read and write individual molecules at rapid rates, the structure of DNA may not be the best way to do it. All of the advantages of DNA storage are eliminated if the IO requires complicated and expensive machinery.

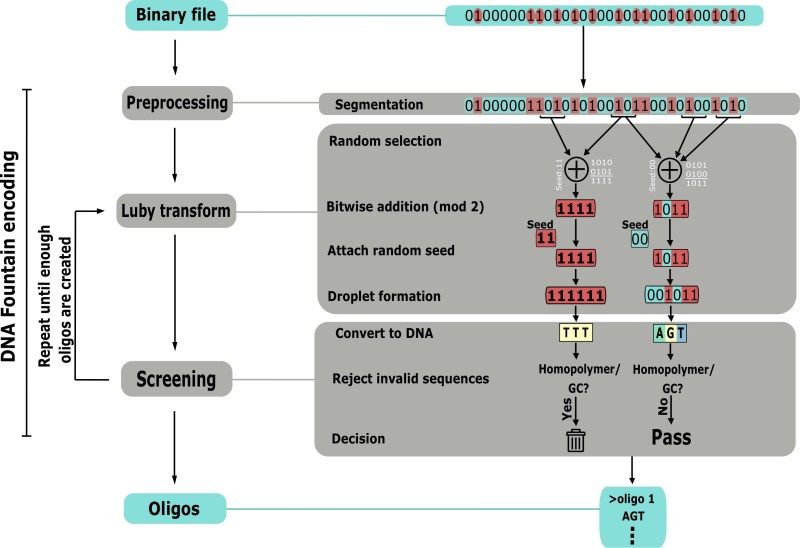

Understanding and organizing the data is another issue because of the fragility of DNA. One group has come up with a method of storing data called DNA Fountain, which approaches the theoretical maximum amount of information per nucleotide. It also takes into account oligo length limits, base pair percentages (can’t have too many GCs to ATs), and long strings of the same base (AAAAAAAAA). The coding structure builds a large number of 38-byte packets, each containing the data, a Reed-Solomon error-correcting code, and a 4-byte random number generator seed that effectively serves as an index. Each packet is then turned into an oligo. This pile of oligos can then be replicated and sequenced to extract the data and decode it. Current methods of reading and writing DNA aren’t working on full strands and chromosomes; they work with tiny little chunks of DNA oligos. Mix two batches of oligos and you may not be able to get your original data back.
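The screening step described above, rejecting candidate oligos with a lopsided GC balance or long runs of one base, can be sketched as a simple filter. This is not the DNA Fountain implementation; the thresholds (45 to 55 percent GC, runs of at most three) and the function names are illustrative assumptions.

```python
from itertools import groupby


def gc_fraction(seq: str) -> float:
    """Fraction of bases in the sequence that are G or C."""
    if not seq:
        raise ValueError("empty sequence")
    return sum(base in "GC" for base in seq) / len(seq)


def longest_run(seq: str) -> int:
    """Length of the longest stretch of one repeated base."""
    if not seq:
        raise ValueError("empty sequence")
    return max(len(list(group)) for _, group in groupby(seq))


def is_valid_oligo(seq: str, gc_min: float = 0.45, gc_max: float = 0.55,
                   max_run: int = 3) -> bool:
    """Accept a candidate only if GC balance and run length are both in bounds."""
    return gc_min <= gc_fraction(seq) <= gc_max and longest_run(seq) <= max_run


print(is_valid_oligo("ACGTACGTAC"))  # True: 50% GC, no repeated bases
print(is_valid_oligo("AAAAAAAAAC"))  # False: 10% GC and a run of nine A's
print(is_valid_oligo("ACGGGGTCAT"))  # False: 60% GC and a run of four G's
```

A fountain-style encoder can afford to throw away candidates that fail a check like this, because it can keep generating fresh packets from new seeds until enough valid oligos exist.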

To summarize, building a cell that contains the data you want requires using DNA Fountain to encode the oligos, synthesizing the oligos, using CRISPR-Cas9 to insert the oligos into functional DNA, and then embedding that DNA into a cell. It’s not impossible, but it requires a boatload of expensive equipment.

Backups

Once you’ve settled on DNA as your storage medium, making backups becomes possibly the easiest part of the whole thing, and you can do it right now on the cheap with the PocketPCR thermal cycler. It may make more sense to keep the DNA in cells, though, because they have built-in mechanisms for reading and writing the data and making copies, and they protect the DNA. It means some of the DNA must be dedicated to this cell structure, but consider that similar to the overhead you would already have for a filesystem. Having DNA in cells, and specifically in bacteria, means making backups is as easy as not washing your hands after going to the bathroom. In this case, DNA stands for “Dat’s Nasty, Alright?”

Longevity

DNA has been successfully sequenced from a horse that lived roughly 700,000 years ago. With that kind of retention possibility, we can be confident that our backups of the Windows ME ISO could last far beyond a computer’s ability to run it, and our tweet history will baffle anthropologists for millennia. Of course, we don’t know if this kind of longevity can be exceeded using existing technology, but some research has been done to figure out how to make sure the DNA doesn’t break down much sooner than that.

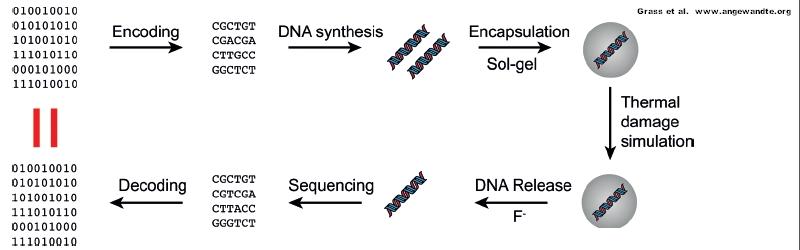

DNA stands for Denatures Near Jalapenos (the J is silent), though, and unravels when things get hot, between 70 and 100°C. That makes it hardly better than regular electronic devices, and it’s got the extra vulnerability to UV light as well. To get around these problems, the DNA can be encased in silica and titanium dioxide. The neat thing is that DNA isn’t really harmed by some organic solvents, so it’s possible to dissolve away the silica and extract the DNA relatively easily. These extra steps make it unsuitable for rapid data access, though, and make duplication impossible while encapsulated, so for short term storage the DNA still has to be exposed.

Conclusions

DNA, short for Discovering New Acronyms, is a data storage technique developed over hundreds of millions of years, and it’s very good at what it does. Electronics developed by humans pale in comparison to the capabilities of cells in many ways, but excel in others. Will it be possible to bridge the gap and use aspects of each to create even better machines? Considering the timeline of progress, we’re much closer now to mastering that bridge than we were when the transistor was invented in 1947 and the structure of DNA was discovered in 1953. We will likely be able to miniaturize and speed up the interface significantly further in the decades ahead, to the point of being commercially viable. We may see a new Moore’s Law emerge with respect to interfacing molecular data storage.

In other words, this could become a real thing. At the moment the process of writing and reading DNA is way too slow and requires too many chemical processes and reactions, but it’s commonplace enough that people are now regularly doing fun things like embedding videos in DNA and embedding the gcode in 3D printed rabbits.

A completely new paradigm of IO is necessary to make it work. It’s possible that DNA isn’t the best way to do it, and that another molecular storage mechanism that’s more silicon friendly will win out. What evidence of our civilization do you think will survive tens of millions of years? The only real evidence left over may just be our fossils and our DNA, which makes me wonder if maybe the common cold is an encoded video of a dinosaur dancing that went viral in more ways than one.
