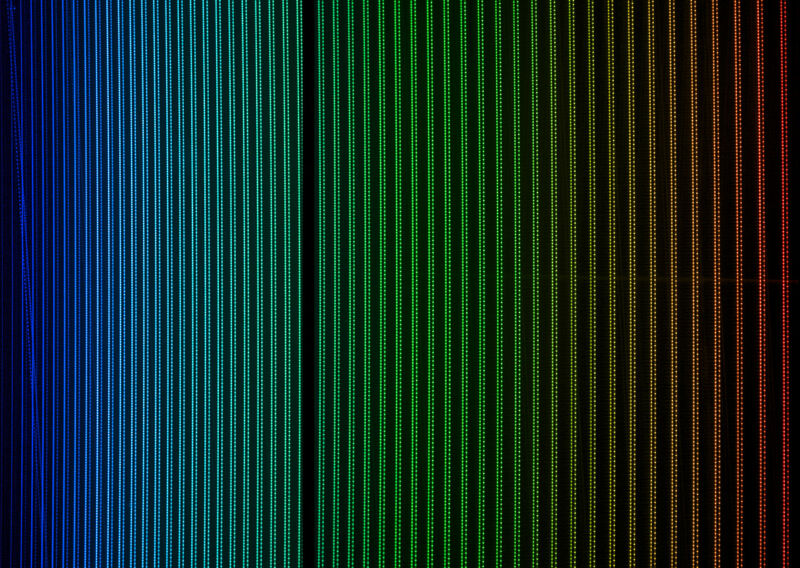

The output of two optical frequency combs, showing the light appearing at evenly spaced wavelengths. (credit: ESO)

AI and machine-learning techniques have become a major focus of everything from cloud computing services to cell phone manufacturers. Unfortunately, our existing processors are a bad match for the sort of algorithms that many of these techniques are based on, in part because those algorithms require frequent round trips between the processor and memory. To deal with this bottleneck, researchers have figured out how to perform calculations in memory and designed chips where each processing unit has a bit of memory attached.

Now, two different teams of researchers have figured out how to perform calculations with light in a way that both merges memory and calculations and allows for massive parallelism. Despite the differences in implementation, the hardware designed by these teams has a common feature: it allows the same piece of hardware to simultaneously perform different calculations using different frequencies of light. While the hardware is not yet at the level of performance of some dedicated processors, the approach can scale easily and can be implemented using on-chip hardware, raising the prospect of using it as a dedicated co-processor.

A fine-toothed comb

The new work relies on hardware called a frequency comb, a technology that won some of its creators the 2005 Nobel Prize in Physics. A lot of interesting physics is behind how the combs work, but what we care about is the outcome of that physics. There are several ways to produce a frequency comb, and they all produce the same thing: a beam of light that is composed of evenly spaced frequencies. So a frequency comb in visible wavelengths might be composed of light with wavelengths of roughly 500 nanometers, 510nm, 520nm, and so on. (Strictly speaking, the even spacing is in frequency, so the gaps between neighboring wavelengths vary slightly.)
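To make "evenly spaced frequencies" concrete, here is a minimal Python sketch that builds a toy comb and converts each line to a wavelength. The starting frequency, the spacing, and the helper names (`comb_frequencies`, `to_wavelength_nm`) are illustrative assumptions, not values or code from either research team; the 10 terahertz spacing in particular is hugely exaggerated so the lines land about 10nm apart.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s

def comb_frequencies(f_start_hz, spacing_hz, n_lines):
    """Return n_lines frequencies, each spacing_hz above the previous one."""
    return [f_start_hz + n * spacing_hz for n in range(n_lines)]

def to_wavelength_nm(freq_hz):
    """Convert an optical frequency to its vacuum wavelength in nanometers."""
    return C / freq_hz * 1e9

# Assumed toy values: four lines starting at 560 THz, spaced 10 THz apart.
lines_hz = comb_frequencies(f_start_hz=560e12, spacing_hz=10e12, n_lines=4)
wavelengths_nm = [to_wavelength_nm(f) for f in lines_hz]

# Gaps between neighboring lines, in frequency and in wavelength.
freq_gaps = [b - a for a, b in zip(lines_hz, lines_hz[1:])]
wl_gaps = [a - b for a, b in zip(wavelengths_nm, wavelengths_nm[1:])]

for f, wl in zip(lines_hz, wavelengths_nm):
    print(f"{f / 1e12:.0f} THz  ->  {wl:.1f} nm")
print("frequency gaps (THz):", [g / 1e12 for g in freq_gaps])
print("wavelength gaps (nm):", [round(g, 2) for g in wl_gaps])
```

The frequency gaps come out identical, while the wavelength gaps shrink a little from one line to the next, which is why a list like 500nm, 510nm, 520nm is best read as an approximation.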
